If you use ChatGPT much, you’ve likely experimented with DALL-E 3, the latest version of OpenAI’s AI image generator. DALL-E 3 can be shockingly good at bringing your ideas to life, but it is sometimes shockingly bad at understanding certain details, or just shocking in what it chooses to emphasize. Still, if you want to see a specific scene move from your head to your screen, DALL-E 3 is usually pretty helpful; it can even get hands right.

But here’s the thing: DALL-E 3 is still an AI model, not a mind reader. If you want images that actually look like what you’re imagining, you need to learn how to speak its language.

After some trial and error (and a few unintentional horrors), I’ve become fairly adept at speaking its language. So here are five key tips that will help you do the same.

Polish in HD

DALL-E 3 has a quality mode called ‘hd,’ which makes images look sharper and more detailed. Think of it like adding a high-end camera lens to your AI-generated art – textures look richer, lighting is more refined, and things like fabric, fur, and reflections have more depth.

Here’s how it looks with this prompt: “A rendering of a close-up shot of a butterfly resting on a sunflower. Quality: hd.”
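If you use DALL-E 3 through OpenAI’s Images API rather than through ChatGPT, the same setting is a request parameter: `quality` accepts `"standard"` or `"hd"`. The minimal sketch below only assembles the keyword arguments for such a request. `build_request` is a hypothetical helper, and it makes no network call.

```python
# Minimal sketch, assuming DALL-E 3 is called through OpenAI's Images API.
# build_request is a hypothetical helper: it only assembles keyword arguments
# and never contacts the API.

VALID_QUALITIES = {"standard", "hd"}  # quality values DALL-E 3 accepts


def build_request(prompt: str, quality: str = "hd") -> dict:
    """Return keyword arguments for an images.generate call."""
    if quality not in VALID_QUALITIES:
        raise ValueError(f"quality must be one of {sorted(VALID_QUALITIES)}")
    return {
        "model": "dall-e-3",
        "prompt": prompt,
        "quality": quality,
        "size": "1024x1024",
        "n": 1,  # DALL-E 3 returns one image per request
    }


params = build_request(
    "A rendering of a close-up shot of a butterfly resting on a sunflower."
)
print(params["quality"])  # hd
```

Assuming the official `openai` Python package is installed and an API key is configured, these arguments could be passed as `OpenAI().images.generate(**params)`. Building the request separately from the call lets you check the settings before sending anything.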

Play with aspect ratio

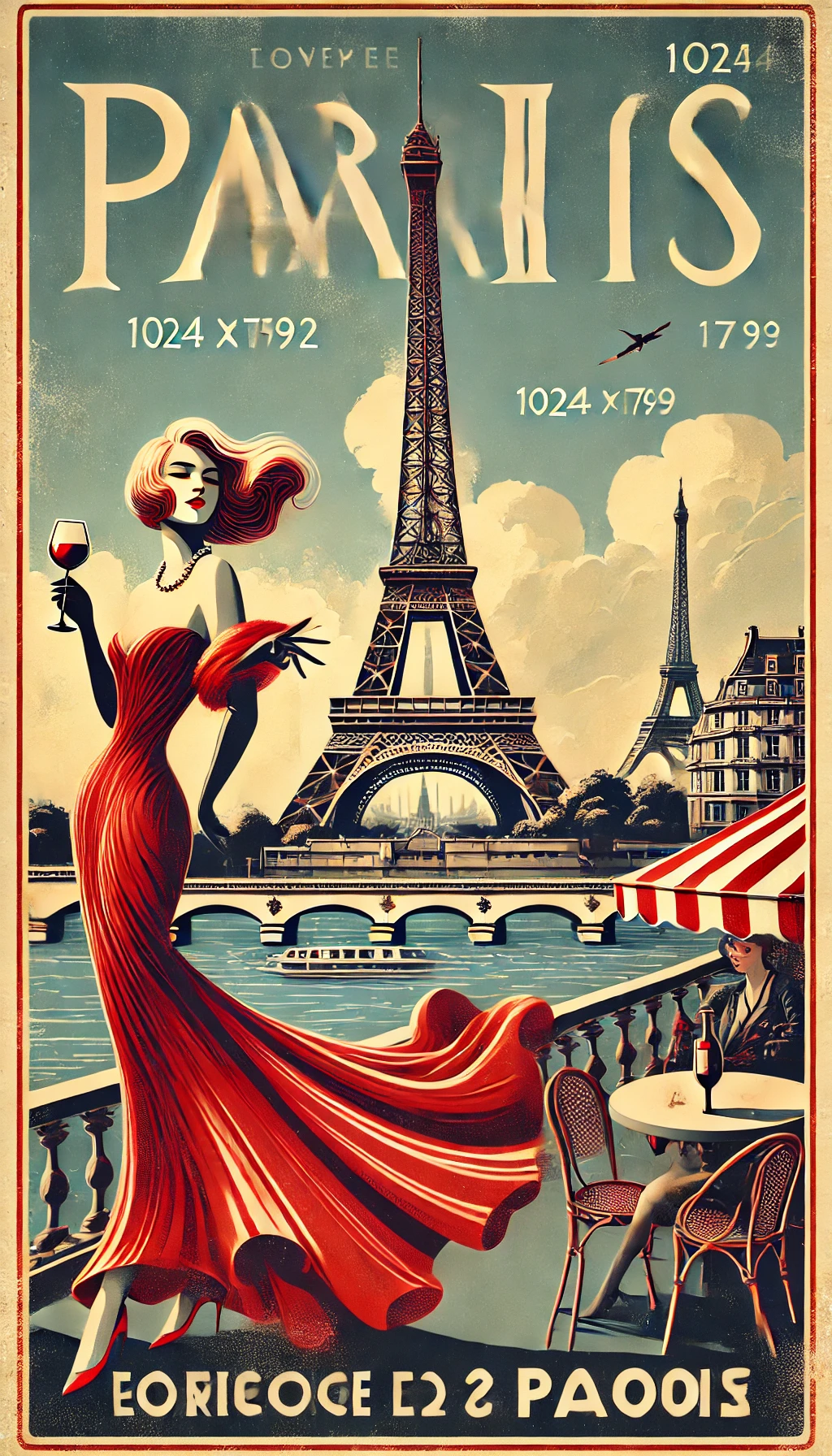

Not every image has to be a square. DALL-E 3 lets you choose the aspect ratio (square, wide, or tall). That may seem minor, but it can make a big difference when you want an image to look cinematic or portrait-like.

Square is fine, but why limit yourself when you can make something epic? Non-square formats are especially useful for social media graphics and posters, like the one below, which came from the prompt: “A vertical poster of a vintage travel advertisement for Paris, size 1024×1792 pixels.”
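In the Images API, the aspect ratio is set through the `size` parameter. DALL-E 3 accepts exactly three values:

- `1024x1024` (square)
- `1792x1024` (landscape, a 7:4 ratio)
- `1024x1792` (portrait, 4:7), the size used in the poster prompt above

Here is a minimal sketch that maps orientation names to those strings. `SIZES`, `size_for`, and `aspect_ratio` are hypothetical names chosen for illustration.

```python
# Minimal sketch, assuming OpenAI's Images API. DALL-E 3 accepts three sizes,
# one per orientation. SIZES, size_for and aspect_ratio are hypothetical names.

SIZES = {
    "square": "1024x1024",
    "landscape": "1792x1024",  # wide, cinematic framing
    "portrait": "1024x1792",   # tall, poster-style framing
}


def size_for(orientation: str) -> str:
    """Map an orientation name to a DALL-E 3 size string."""
    try:
        return SIZES[orientation]
    except KeyError:
        raise ValueError(f"orientation must be one of {sorted(SIZES)}") from None


def aspect_ratio(size: str) -> float:
    """Width divided by height for a 'WIDTHxHEIGHT' size string."""
    width, height = (int(part) for part in size.split("x"))
    return width / height


poster_size = size_for("portrait")
print(poster_size)                          # 1024x1792
print(round(aspect_ratio(poster_size), 3))  # 0.571
```

Because the lookup validates its input, a typo like `"portait"` fails immediately instead of quietly producing a square image.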

Think like a film director

To get an image that evokes emotion, it sometimes helps to think like a photographer in the real world. Consider camera angles and composition techniques, and look them up if necessary. These choices can dramatically change how an image looks.

Instead of a flat, dead-on image, you can request angles like close-up, bird’s-eye view, or over-the-shoulder. The same goes for composition styles and terms like ‘symmetrical composition’ or ‘depth of field.’

That’s how you can get the following image from this prompt: “A dramatic over-the-shoulder shot of a lone cowboy standing on a rocky cliff, gazing at the vast desert landscape below. The sun sets in the distance, casting long shadows across the canyon. The cowboy’s silhouette is sharp against the golden sky, evoking a cinematic Western feel.”

Say what you don’t want

One lesser-known but highly effective trick with DALL-E 3 is telling it what not to include, which helps keep unwanted elements out of your image. You might rule out specific colors, objects, or styles, or refine the mood by describing what you don’t want the image to feel like.

That’s how I got the image below, using the prompt: “A peaceful park in autumn with a young woman sitting on a wooden bench, reading a book. Golden leaves cover the ground, and a soft breeze rustles the trees. No other people, no litter, just a quiet, serene moment in nature.”

Be overly specific

Think of DALL-E 3 as a very literal genie: it gives you exactly what you ask for, no more, no less. So if you type in “a dog,” don’t be surprised when it spits out a random dog of indeterminate breed, vibe, or moral alignment. The more details you include – like breed, color, setting, mood, or even art style – the better the results.

For example, you might start with “A wizard casting a spell,” but you’d be better off submitting: “An elderly wizard with a long, braided white beard, dressed in emerald-green robes embroidered with gold runes, conjuring a swirling vortex of blue lightning from his fingertips in a stormy mountain landscape.” You can see both below.
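If you generate a lot of images, a simple template can make specificity a habit. It asks you for a subject, concrete details, and a setting, plus the optional camera angle and exclusions from the earlier tips. The `PromptSpec` class below is a hypothetical helper, not part of DALL-E 3 or ChatGPT. It just joins plain text into one prompt.

```python
# Hypothetical helper: it only builds a prompt string and never calls DALL-E 3.
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    subject: str                                         # who or what is in the scene
    details: list[str] = field(default_factory=list)     # appearance, clothing, action
    setting: str = ""                                    # where the scene takes place
    shot: str = ""                                       # optional camera angle or framing
    exclusions: list[str] = field(default_factory=list)  # elements to leave out

    def render(self) -> str:
        """Join the filled-in fields into a single prompt string."""
        text = ", ".join([self.subject, *self.details])
        if self.setting:
            text += f" in {self.setting}"
        if self.shot:
            text = f"{self.shot} of {text}"
        text += "."
        if self.exclusions:
            text += " No " + ", no ".join(self.exclusions) + "."
        # Capitalize only the first character so proper nouns keep their case.
        return text[0].upper() + text[1:]


wizard = PromptSpec(
    subject="an elderly wizard",
    details=[
        "with a long, braided white beard",
        "dressed in emerald-green robes embroidered with gold runes",
        "conjuring a swirling vortex of blue lightning from his fingertips",
    ],
    setting="a stormy mountain landscape",
)
print(wizard.render())
```

Empty fields are simply skipped, so `PromptSpec("a dog").render()` still works. It just returns the vague `"A dog."` that this tip warns against.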